Google Just Built an AI to Talk to Dolphins. Seriously.

Google just launched an AI model trained to understand dolphin sounds (yes, seriously). DolphinGemma analyzes clicks and whistles to build a shared “vocabulary” with dolphins. It’s not translation, but it’s a bold first step toward talking to another species.

April 14, 2025 22:01

On April 14, 2025, Google, in partnership with the Wild Dolphin Project (WDP) and Georgia Tech, unveiled DolphinGemma—a 400-million-parameter AI model designed to analyze and generate dolphin vocalizations in an effort to decode how dolphins communicate.

How It Works:

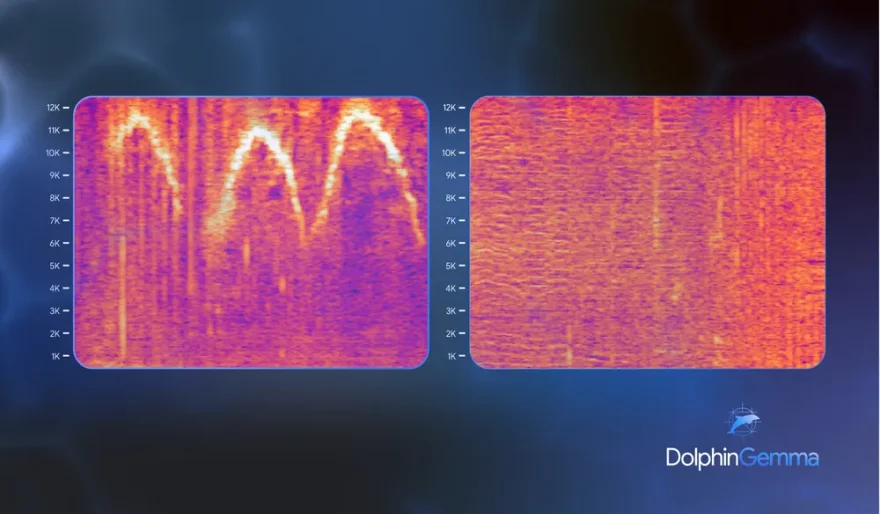

Built on Google’s open Gemma architecture, DolphinGemma uses a SoundStream tokenizer to process the complexity of dolphin sounds—like whistles, clicks, and burst pulses—and predict what sounds come next, just like how language models predict text.
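To make the "predict what comes next" idea concrete, here is a minimal sketch in Python. It is not DolphinGemma's method: it swaps the neural model for a simple bigram counter, and the token ids (`WHISTLE`, `CLICK`, `BURST`) and the toy recordings are made up for illustration. A real tokenizer such as SoundStream produces learned audio codes, not three hand-labeled categories.

```python
from collections import Counter, defaultdict

# Hypothetical token ids standing in for the output of an audio tokenizer.
# These three labels are invented for illustration only.
WHISTLE, CLICK, BURST = 0, 1, 2


def train_bigram(sequences):
    """Count how often each token is followed by each other token."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, prev):
    """Return the most frequent follower of `prev`, or None if it was never seen."""
    followers = counts.get(prev)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Toy "recordings", already converted to token sequences (assumed data).
recordings = [
    [WHISTLE, CLICK, CLICK, BURST],
    [WHISTLE, CLICK, BURST],
    [CLICK, CLICK, BURST, WHISTLE],
]
model = train_bigram(recordings)
next_after_whistle = predict_next(model, WHISTLE)
```

In this toy data a whistle is always followed by a click, so `next_after_whistle` is `CLICK`. A large model does the same kind of thing over far longer contexts and a much richer token vocabulary.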

It’s trained on 40 years’ worth of Atlantic spotted dolphin recordings from the WDP, making it one of the most comprehensive marine acoustic models ever built.

Why It’s a Game Changer:

Unlike older tools that required custom gear, DolphinGemma runs efficiently on mobile devices like the Pixel 6, with support coming for Pixel 9 this summer. This enables real-time fieldwork, giving marine researchers a lightweight, high-powered tool in the palm of their hands.

Enter the CHAT System:

DolphinGemma is meant to work alongside the Cetacean Hearing Augmentation Telemetry (CHAT) system, which WDP developed with Georgia Tech. CHAT plays synthetic whistles to associate specific sounds with objects like scarves or seagrass. When a dolphin mimics one of these whistles, researchers are alerted right away through bone-conducting headphones, so they can respond quickly.

It’s not about translating a dolphin "language" word-for-word—rather, it’s about building a shared symbolic vocabulary that enables basic two-way communication.
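The whistle-to-object association described above can be sketched roughly as a lookup against stored templates. Everything here is an assumption for illustration: the `TEMPLATES` values, the frequency-contour representation, the distance measure, and the 1 kHz threshold are all hypothetical, and this is not how CHAT is actually implemented.

```python
import math

# Hypothetical whistle templates: object name -> frequency contour in kHz.
# The numbers are invented for illustration, not taken from WDP data.
TEMPLATES = {
    "scarf": [8.0, 10.0, 12.0, 10.0],
    "seagrass": [12.0, 9.0, 7.0, 9.0],
}


def contour_distance(a, b):
    """Root-mean-square difference between two equal-length contours."""
    if len(a) != len(b):
        raise ValueError("contours must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


def match_whistle(contour, templates=TEMPLATES, threshold_khz=1.0):
    """Return the object whose template is closest, or None if none is close enough."""
    best_name, best_dist = None, float("inf")
    for name, template in templates.items():
        dist = contour_distance(contour, template)
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name if best_dist <= threshold_khz else None


# A whistle heard in the water that roughly mimics the "scarf" template.
heard = [8.2, 9.8, 12.3, 10.1]
alert = match_whistle(heard)
```

If `alert` is not `None`, a field system could pass the object name on to the researcher, which is the role the headphones play in the description above. The threshold keeps unrelated sounds from triggering a false alert.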

Looking Ahead:

- Open Model Incoming: Google plans to release DolphinGemma this summer under an open model license.

- Global Adaptation: Researchers will be able to fine-tune it for other cetacean species, although adjustments may be necessary due to vocal differences.

The Bigger Picture:

Dr. Denise Herzing of WDP sees this as a step toward genuine interspecies communication. But others are already speculating: could a model trained to detect subtle, nonlinear acoustic patterns have value beyond marine biology?

One wild idea floated: using similar models to identify patterns in crypto trading. There’s no confirmation—just curiosity.

Either way, DolphinGemma marks a turning point: not just in AI research, but in how humans might one day talk to animals.
