Google’s Gemma 3 Release: A New Addition to the Open Model Landscape

Google has just released Gemma 3, a powerful open AI model family with multimodal support, a 128K-token context window, and support for over 140 languages. Google claims it outperforms OpenAI’s o3-mini and Llama-405B in preliminary evaluations. Is this a genuine game-changer or just another model update?

March 13, 2025 00:58

On March 12, 2025, Google introduced Gemma 3, the latest generation of its lightweight open models for developers. Built on the same research and technology as Gemini 2.0, it comes in four sizes: 1 billion (1B), 4 billion (4B), 12 billion (12B), and 27 billion (27B) parameters. A key focus of Gemma 3 is efficiency across hardware configurations: the models are designed to run on setups ranging from laptops to workstations using a single GPU or TPU.

Key Features of Gemma 3

- Multimodal Capabilities: The 4B, 12B, and 27B models support vision-language inputs, enabling applications such as image analysis, document summarization, and question answering. However, the 1B model remains text-only.

- Expanded Context Window: A significant upgrade from Gemma 2’s 8K-token limit, the context window now extends to 128K tokens (32K for the 1B model), allowing the model to process much larger inputs, including long-form text, multiple high-resolution images, and extended video transcripts.

- Multilingual Support: Gemma 3 offers out-of-the-box support for over 35 languages and pretrained support for over 140 languages, broadening its usability in global applications.

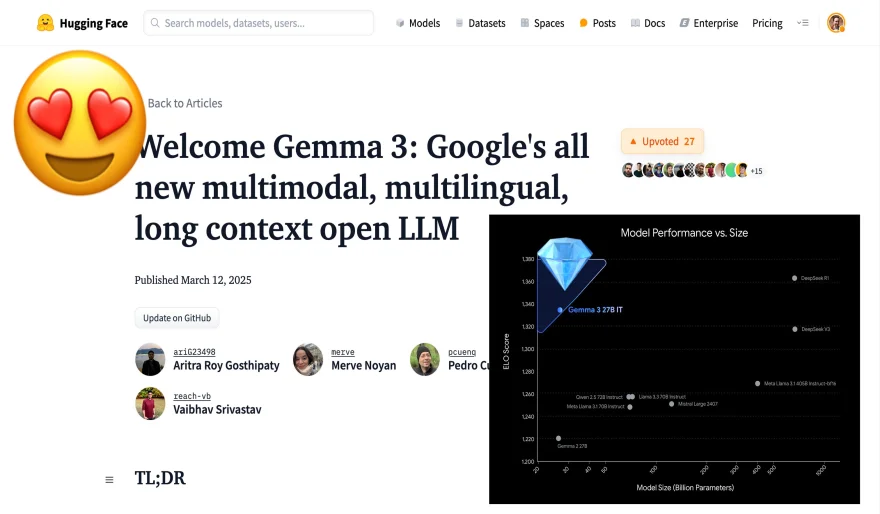

- Performance Benchmarks: According to Google’s preliminary human preference evaluations, Gemma 3’s 27B instruction-tuned model scored an Elo rating of 1338 on LMArena (formerly the LMSYS Chatbot Arena), placing it among the top 10 models. Google’s reported wins over much larger open models such as Llama-405B and DeepSeek-V3, as well as OpenAI’s o3-mini, have yet to be confirmed by independent evaluations.

- Function Calling & Structured Outputs: The model includes function calling and structured output capabilities, making it potentially useful for automation and AI-driven workflows.

- Quantized Versions for Efficiency: Google has also released official quantized versions (e.g., int4), aimed at improving memory efficiency without significantly compromising accuracy, which may benefit users operating on resource-constrained devices.
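To put the 1338 Elo figure from the benchmarks bullet in context: on an Elo-style scale, the gap between two ratings maps to an expected head-to-head preference rate. The sketch below uses the classic logistic Elo formula with a 400-point scale. The Arena leaderboard’s own methodology may differ in detail (it fits a Bradley-Terry-style model), and the 1300 comparison rating is a hypothetical value, not a reported score.

```python
def expected_win_rate(rating_a: float, rating_b: float) -> float:
    """Expected score of A against B under the standard Elo logistic model (400-point scale)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


if __name__ == "__main__":
    gemma_27b = 1338.0           # Elo reported for Gemma 3 27B instruction-tuned
    hypothetical_rival = 1300.0  # hypothetical rating, for illustration only
    p = expected_win_rate(gemma_27b, hypothetical_rival)
    print(f"Expected preference rate vs. rival: {p:.3f}")
```

A 38-point lead works out to an expected preference rate of only about 55%, which is why small Elo gaps near the top of a leaderboard are best read as rough parity rather than clear superiority.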
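Function calling with an open model is typically prompt-driven: the application describes the available functions, the model replies with a structured call, and the application parses, validates, and executes it. The sketch below covers only the application side and assumes the model has been prompted to reply with a JSON object of the form `{"name": ..., "arguments": {...}}`. That format and the `get_weather` tool are hypothetical illustrations, not an official Gemma 3 protocol.

```python
import json
from typing import Any, Callable, Dict


# Hypothetical tool the model may call; a real app would query a weather service.
def get_weather(city: str, unit: str = "celsius") -> Dict[str, Any]:
    return {"city": city, "unit": unit, "temperature": 21}


# Registry mapping tool names (as described to the model in the prompt) to callables.
TOOLS: Dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def dispatch(model_output: str) -> Any:
    """Parse an assumed JSON function call emitted by the model and execute it."""
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model output is not valid JSON: {exc}") from exc
    if not isinstance(call, dict):
        raise ValueError("expected a JSON object")
    name = call.get("name")
    args = call.get("arguments", {})
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name!r}")
    if not isinstance(args, dict):
        raise ValueError("'arguments' must be a JSON object")
    return TOOLS[name](**args)


if __name__ == "__main__":
    # Example reply a model might produce when prompted with the tool description.
    reply = '{"name": "get_weather", "arguments": {"city": "Zurich"}}'
    print(dispatch(reply))
```

Validating the tool name and argument shape before executing anything matters because model output is untrusted; in practice you may also need to strip surrounding prose or code fences before parsing.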
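A rough way to see why int4 quantization helps on constrained hardware: weight memory scales with parameter count times bits per parameter. The sketch below estimates weight storage only, treating the nominal 1B/4B/12B/27B sizes as exact parameter counts; it ignores the KV cache, activations, and framework overhead, so real footprints will be higher.

```python
# Nominal parameter counts for the four Gemma 3 sizes (treated as exact for this estimate).
MODEL_PARAMS = {"1B": 1e9, "4B": 4e9, "12B": 12e9, "27B": 27e9}

# Bits per weight for common storage formats.
BITS_PER_WEIGHT = {"bf16": 16, "int8": 8, "int4": 4}


def weight_memory_gb(num_params: float, bits: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes), weights only."""
    return num_params * bits / 8 / 1e9


if __name__ == "__main__":
    for size, params in MODEL_PARAMS.items():
        row = ", ".join(
            f"{fmt}: {weight_memory_gb(params, bits):.1f} GB"
            for fmt, bits in BITS_PER_WEIGHT.items()
        )
        print(f"{size:>3} -> {row}")
```

For the 27B model this gives about 54 GB of weights in bf16 versus about 13.5 GB in int4, a fourfold reduction that largely explains the appeal of quantized builds for resource-constrained setups.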

Deployment and Accessibility

Google has integrated Gemma 3 with widely used frameworks, including Hugging Face Transformers, PyTorch, JAX, Keras, and Ollama, and supports a range of hardware: NVIDIA GPUs, Google Cloud TPUs, AMD GPUs (via ROCm), and CPUs (via Gemma.cpp). Developers can access and use the model through:

- Google AI Studio for quick experimentation.

- Hugging Face, Kaggle, and Ollama for downloads.

- Platforms like Vertex AI, Cloud Run, and local environments for deployment.

- Google Colab for fine-tuning on custom datasets.
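For local experimentation, here is a minimal sketch of querying Gemma 3 through Ollama’s REST API using only the Python standard library. It assumes an Ollama server is running on its default port (11434) and that a Gemma 3 model has already been pulled, for example with `ollama pull gemma3:1b`; the model tag and prompt are illustrative choices.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_request(prompt: str, model: str = "gemma3:1b") -> dict:
    """Build a non-streaming /api/generate payload."""
    return {"model": model, "prompt": prompt, "stream": False}


def generate(prompt: str, model: str = "gemma3:1b", timeout: float = 120.0) -> str:
    """Send the prompt to a local Ollama server and return the generated text."""
    data = json.dumps(build_request(prompt, model)).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    # With "stream": False, Ollama returns one JSON object; "response" holds the text.
    return body["response"]
```

Calling `generate("Explain a context window in one sentence.")` returns the model’s reply as a string. Setting `"stream": False` trades incremental output for simpler handling; for multi-turn conversations, Ollama’s `/api/chat` endpoint accepts a list of messages instead of a single prompt.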

Additionally, the 1B model (529 MB) is optimized for mobile devices via Google AI Edge, reaching prefill speeds of up to 2,585 tokens per second. NVIDIA has also optimized Gemma 3 for its GPUs, from Jetson Nano to Blackwell chips, and the model is available through the NVIDIA API Catalog.
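The on-device figures can be made more concrete with two back-of-the-envelope estimates. Google reports the 2,585 tokens-per-second figure for prefill (prompt processing), so it translates into how long the model takes to read a prompt before generating; the sketch assumes that rate stays constant regardless of prompt length, which real devices will not match exactly. The second estimate assumes roughly 1 billion parameters and treats 529 MB as 529 million bytes.

```python
def prefill_seconds(prompt_tokens: int, tokens_per_second: float = 2585.0) -> float:
    """Time to process a prompt at a constant prefill rate (an idealized assumption)."""
    return prompt_tokens / tokens_per_second


def bits_per_param(size_bytes: float, num_params: float) -> float:
    """Average storage bits per parameter implied by a file size."""
    return size_bytes * 8 / num_params


if __name__ == "__main__":
    for n in (256, 1024, 4096):
        print(f"{n:>5}-token prompt -> ~{prefill_seconds(n):.2f} s prefill")
    # Assumes ~1e9 parameters and 529 MB = 529e6 bytes.
    print(f"~{bits_per_param(529e6, 1e9):.1f} bits per parameter")
```

Under these assumptions a 4,096-token prompt takes roughly 1.6 seconds to process, and 529 MB for about a billion parameters averages a little over 4 bits per weight. That is consistent with a 4-bit quantized build, though this is an inference rather than a figure from the release.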

Additional Release: ShieldGemma 2

Alongside Gemma 3, Google introduced ShieldGemma 2, a 4B-parameter safety checker designed to detect potentially unsafe content in images. The system is capable of flagging elements such as violence or explicit material and can be customized to align with specific safety requirements.
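Customizing a safety checker usually comes down to which policies you enforce and how strict each threshold is. The sketch below assumes the classifier has already produced a violation probability per policy for an image; the policy names, scores, and thresholds are hypothetical placeholders, and this is application-side logic rather than ShieldGemma 2’s own API.

```python
from typing import Dict, List

# Hypothetical per-deployment thresholds: lower values mean stricter filtering.
DEFAULT_THRESHOLDS: Dict[str, float] = {
    "dangerous_content": 0.5,
    "sexually_explicit": 0.5,
    "violence": 0.5,
}


def flagged_policies(
    scores: Dict[str, float], thresholds: Dict[str, float] = DEFAULT_THRESHOLDS
) -> List[str]:
    """Return the policies whose assumed violation probability meets or exceeds its threshold."""
    return sorted(
        policy
        for policy, threshold in thresholds.items()
        if scores.get(policy, 0.0) >= threshold
    )


if __name__ == "__main__":
    # Hypothetical classifier scores for one image.
    image_scores = {"dangerous_content": 0.12, "sexually_explicit": 0.03, "violence": 0.71}
    print(flagged_policies(image_scores))  # default thresholds
    # A stricter deployment might lower one threshold.
    strict = {**DEFAULT_THRESHOLDS, "dangerous_content": 0.1}
    print(flagged_policies(image_scores, strict))
```

Keeping thresholds in configuration rather than in the model lets each deployment tune strictness per policy without retraining the classifier.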

Community, Research, and Safety Considerations

The Gemmaverse, Google’s broader developer community, has already seen over 60,000 community-created model variants, with Gemma models downloaded over 100 million times. Google has also launched the Gemma 3 Academic Program, which provides researchers with $10,000 in Google Cloud credits for experimentation and model training.

On the safety and responsibility front, Google reports that Gemma 3 has undergone rigorous testing to mitigate risks related to misuse and unintended data reproduction. The model is designed to reduce verbatim text outputs and limit personal data leakage, although independent audits may provide further insight into its security and robustness.

Final Thoughts

With its expanded capabilities, multimodal support, and focus on efficiency, Gemma 3 represents another step in Google’s ongoing efforts to provide developer-friendly AI models. While early benchmarks suggest competitive performance, the model’s real-world effectiveness will ultimately be determined by further testing, adoption, and community feedback.