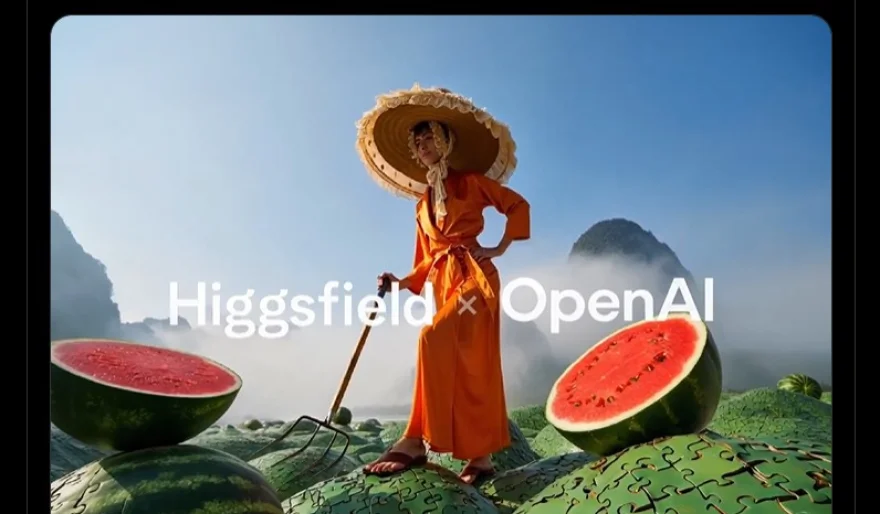

OpenAI’s image model lands in Higgsfield — stylize and animate in 30+ iconic looks with one prompt

OpenAI’s image model now powers Higgsfield, a Canva-meets-Midjourney platform for animated content. Users can turn prompts or images into stylized visuals and animate them in seconds, with no editing skills needed. It’s a big step toward making AI-driven motion design fast, easy, and accessible to all.

April 25, 2025 10:35

OpenAI’s image generation model is now integrated into Higgsfield, a rising creative AI platform that’s shaping up to be the Canva-meets-Midjourney for motion content.

This update means users can now turn any text prompt or static image into a fully stylized visual, and then animate it, all within seconds. Styles range from pop culture staples such as Ghibli, The Simpsons, and Pixar to noir, anime, vintage, and hyperrealism. Each result can then be brought to life with Higgsfield’s cinematic camera tools, which add depth, zoom, pan, and motion cues automatically, with no editing skills needed.

Here’s how it works:

- Input: A prompt (e.g., “a cat detective in Tokyo, at night”) or an existing image
- Style selection: Choose from 30+ curated visual styles
- Animate: Hit animate, and Higgsfield applies smooth camera motion to give the image a dynamic, scene-like feel
- Output: A short animated clip or video, ready to download or post

What this means for the AI creative ecosystem

Higgsfield isn’t just using OpenAI’s model as a backend tool; it’s reimagining the front-end experience for everyday creators, marketers, and storytellers. Instead of hopping between image generators, video editors, and animation software, users get a one-click pipeline that handles both the look and the movement.

This also signals a shift in how OpenAI’s models are being embedded into consumer-facing products. By enabling platforms like Higgsfield, OpenAI’s visual tech becomes more accessible and expressive, especially for those without a design or animation background.

As AI video becomes the next arms race, this partnership brings a new player into the conversation, one focused not just on realism but on stylized, culture-driven motion content.

We’re watching the early foundations of a new kind of creative suite: fast, AI-native, and dripping with visual personality.
