
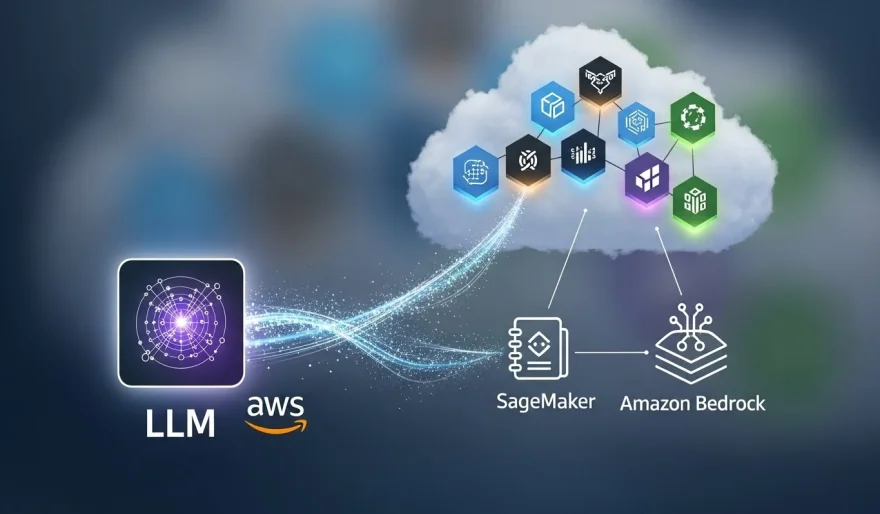

AWS makes custom LLMs effortless with SageMaker and Bedrock

AWS unveils serverless SageMaker and Bedrock tools that let enterprises build and fine-tune custom LLMs, pushing Nova Forge and frontier AI into the spotlight.

December 03, 2025 16:50 | 6 min read

Amazon Web Services is making a bold push into custom large language models (LLMs), unveiling new tools designed to make model building and fine-tuning as easy as clicking a button — or just telling SageMaker what you want in plain English.

This announcement comes hot on the heels of Nova Forge, AWS’s $100K/year service to build custom Nova models for enterprise clients. Now, AWS is expanding SageMaker AI and Amazon Bedrock capabilities, letting enterprises create frontier LLMs that are optimized for their unique data, workflows, and use cases.

🔍 What’s New?

1. Serverless Model Customization in SageMaker

Developers can now build models without worrying about infrastructure.

- Self-guided: point-and-click interface
- Agent-led: natural language prompts let you tell SageMaker exactly what you need

Example: a healthcare company can create a model fine-tuned to medical terminology, with SageMaker handling the heavy lifting automatically.

2. Reinforcement Fine-Tuning in Bedrock

- Developers can pick a reward function or pre-set workflow
- Bedrock automates end-to-end model fine-tuning
- Supports both Amazon’s Nova models and certain open-source models (e.g., DeepSeek, Meta’s Llama)
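To make "pick a reward function" concrete, here is a minimal Python sketch of what such a function could look like in principle. It is an illustration only, not Bedrock's API: the `reward` function, the `REQUIRED_TERMS` glossary, and the `max_words` threshold are all hypothetical names chosen for this example. The idea is simply that a reward function scores each model response, and reinforcement fine-tuning nudges the model toward higher-scoring outputs.

```python
import re

# Hypothetical glossary for illustration; a real project would derive
# domain terms from its own data.
REQUIRED_TERMS = {"hypertension", "contraindication"}


def reward(response: str, required_terms: set[str] = REQUIRED_TERMS,
           max_words: int = 60) -> float:
    """Score a response in [0, 1].

    Rewards coverage of required domain terms and subtracts a flat
    penalty when the response exceeds max_words.
    """
    words = re.findall(r"[a-z]+", response.lower())
    if not words or not required_terms:
        return 0.0
    # Fraction of required terms that appear in the response.
    coverage = len(required_terms & set(words)) / len(required_terms)
    # Flat penalty for overly long answers.
    length_penalty = 0.5 if len(words) > max_words else 0.0
    return max(0.0, coverage - length_penalty)
```

In this sketch, a concise answer that uses both glossary terms scores highest, while a rambling or off-topic answer scores lower. Production reward functions are typically richer (for example, graded by another model or by task-specific checks), but the shape is the same: text in, score out.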

3. Enterprise Differentiation

AWS emphasizes that customization is key to standing out:

“If my competitor has access to the same model, how do I differentiate myself?” — Ankur Mehrotra, AWS

Custom models let enterprises optimize for their brand, data, and use case, rather than relying on off-the-shelf models.

🌐 Why This Matters — For Investors and Enterprise AI Leaders

- Infrastructure-free AI adoption: Removing compute and setup headaches accelerates LLM integration.
- Customization as moat: Enterprises can now differentiate themselves with tailored AI, which in turn is a potential competitive advantage for AWS.
- Leveling the playing field: While OpenAI, Anthropic, and Google's Gemini dominate enterprise mindshare today, AWS is positioning itself as the go-to cloud for enterprise LLM control.
- Frontier LLM focus: By targeting the cutting edge, AWS aims to close the gap in adoption and relevance in the AI arms race.

⚖️ Pros & Cons — Clear-Eyed View

Pros:

- Simplifies LLM deployment for enterprises
- Customization drives differentiation and loyalty
- Serverless and agent-led tools reduce friction for adoption
- Supports both proprietary and open-source frontier models

Cons / Risks:

- AWS still lags in enterprise mindshare compared with OpenAI, Anthropic, and Google's Gemini
- $100K/year Nova Forge may limit adoption to high-end clients
- Success hinges on actual ease-of-use and reliability of these tools
- Competitive pressure from Microsoft Azure, Google Cloud, and specialist AI startups remains intense

🔮 Big Picture

AWS is betting that customization is the next frontier in LLM adoption. Enterprises no longer just want access to the smartest model — they want models that are their own.

By simplifying infrastructure, automating fine-tuning, and providing agent-led guidance, AWS could move from trailing the big names in AI to offering something unique and indispensable.

The question now: can AWS turn these tools into wide adoption before OpenAI and Google consolidate their lead?
