
Building the AI Polygraph: Can AI Revolutionize Lie Detection?

April 28, 2025 09:17 · 7 min read

AI is being explored as a more accurate alternative to the polygraph for detecting lies, using methods such as analyzing vital signs and language patterns. Research shows AI can identify lies with 67% accuracy, better than humans, but it is not yet reliable enough for widespread use. Ethical concerns include the potential erosion of trust in social interactions and the impact on relationships. While AI could help fight fake news, it requires more testing. The growing use of AI in deception detection raises significant ethical and practical challenges.

As artificial intelligence continues to evolve, an intriguing question is whether it can transform the analysis of human behavior, particularly when it comes to detecting lies.

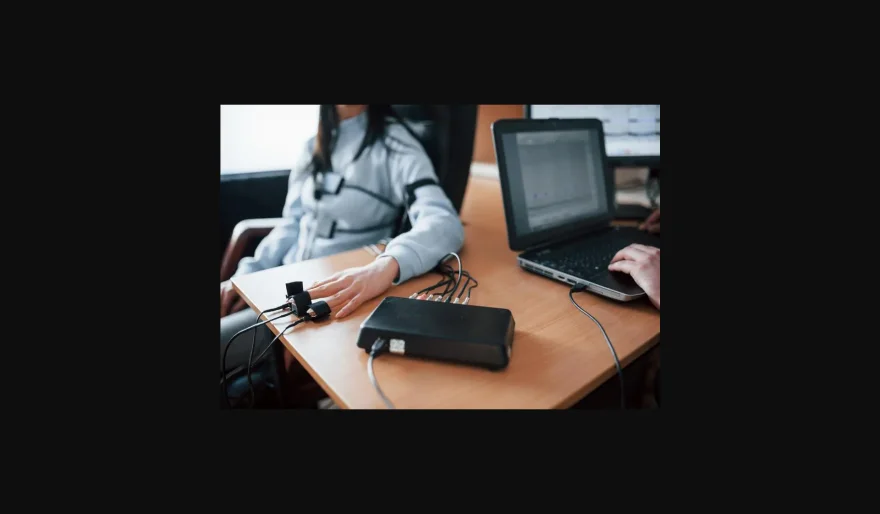

The polygraph, often dubbed the "lie detector," has long been criticized for its inaccuracy. Because it relies on vital signs such as heart rate and blood pressure, which can change for reasons other than lying, it has frequently been shown to produce unreliable results, and its outcomes are often inadmissible in court. Despite these flaws, the polygraph is still used in a variety of settings, and some critics claim it has led to unjust convictions.

AI offers a different approach to truth-seeking. Because it can process vast amounts of data, AI may be able to observe human behavior with far more precision than the polygraph, opening several possible pathways for identifying deception.

AI Approaches to Detecting Lies

AI researchers have proposed two primary methods for applying artificial intelligence to lie detection:

- Analyzing Vital Sign Responses: Just as the polygraph does, AI could assess a person's physiological responses during interrogation, but with much more detailed and accurate comparative analysis.

- Examining Language Tokens: Another promising avenue involves using AI to analyze the actual words people say, applying logic, reasoning, and pattern recognition to detect inconsistencies or contradictions in their statements.

The idea behind the language-based approach is simple: the more lies a person tells, the more tangled their web becomes, until the inconsistencies expose the truth. This raises the question: could AI's precision produce more reliable results than human judgment?

Findings from Research Labs

An MIT Technology Review article from last year covered the work of Alicia von Schenk and her colleagues at the University of Würzburg in Germany, who experimented with using AI to detect false statements. According to their findings, AI was able to identify lies with 67% accuracy, compared to the 50% accuracy rate of humans.

While 67% might not seem groundbreaking, it is notably better than human performance, which, for a simple true-or-false judgment, is no better than a coin flip. However, the scientists also pointed out a critical limitation: even 67% accuracy is not sufficient to make AI a reliable tool for widespread use, especially in real-world applications, where binary judgments of "lie" versus "truth" are often oversimplifications.

The Ethical Dilemma of Trust

One of the most significant issues raised by von Schenk and her team is the potential erosion of trust. As Jessica Hamzelou writes in MIT Technology Review, AI tools could be useful for spotting misinformation, such as the lies we encounter on social media. However, applying these tools too aggressively might undermine trust, a core element of human relationships.

"If the price of accurate judgments is the deterioration of social bonds, is it worth it?"

This raises an important question about the cost of having AI systems that can detect every falsehood: could they lead to a breakdown in human social interaction, where people become increasingly suspicious of each other’s words, even in personal conversations?

The Issue of Fake News and Disinformation

Despite these concerns, von Schenk emphasizes that the rise of fake news and disinformation provides a strong argument for the use of AI in identifying deception. However, the technology must be thoroughly tested and proven to be significantly more effective than human judgment before it can be adopted more widely.

The Anxiety Response in AI

In a related area of research, scientists have found that AI systems can "display" anxiety when exposed to human-written content focused on sensitive or violent topics. This "anxiety" isn't genuine, of course; it reflects the AI's training data. The model has learned from human accounts of violence, which typically express feelings of anxiety.

While AI doesn't experience emotions as humans do, the fact that it can replicate such responses raises intriguing questions about how AI systems might influence human behavior. Is this just a simulation of human emotions, or is there a deeper connection between human social interaction and AI outputs?

Why It Matters

Despite the advances, we are still far from developing a true "AI polygraph." The technology is promising, but many ethical and practical hurdles remain. Whether AI can be trusted with such responsibilities, or whether it risks undermining basic human interactions, remains an open question. As AI systems grow more sophisticated, careful analysis, and perhaps even game theory, will be essential to ensure they are used ethically.

As we continue to push the boundaries of AI, these questions about truth, trust, and human interaction will become even more important. Are we ready for machines that can judge our honesty? And if we are, what kind of world will we create in the process?
