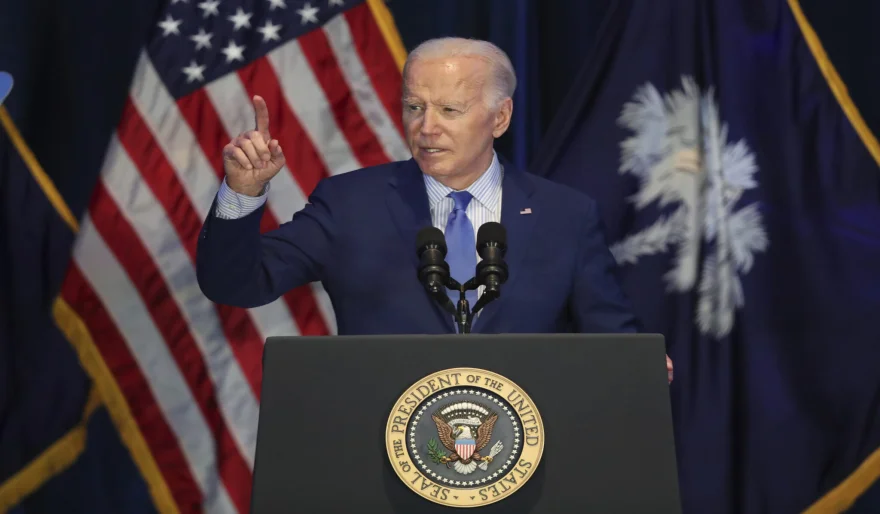

Cracking Open the Black Box: US Govt Demands AI Safety Transparency

6 min read

Big news in AI transparency! The U.S. government is requiring major AI companies to report safety test results to the Department of Commerce, a notable step for accountability and responsible AI development.

January 30, 2024 06:44

The days of AI operating as a black box may be numbered. The U.S. government is taking a bold step towards increased transparency and accountability by requiring major AI companies to report their safety test results to the Department of Commerce. The move marks a significant shift in how the development and deployment of this powerful technology is overseen.

Why the Scrutiny?

As AI becomes woven into the fabric of our lives, from healthcare to transportation to entertainment, concerns about its potential dangers have rightly come to the fore. Biases in algorithms, unintended consequences of AI actions, and the opacity of how these systems operate all contribute to a growing sense of unease. This mandate for safety testing and reporting aims to:

- Shine a light on potential risks: By requiring companies to report their safety assessment methodologies and findings, the government hopes to expose and address potentially harmful biases, vulnerabilities, and unintended consequences before they cause real-world damage.

- Level the playing field: The requirement is meant to hold all major AI players to the same standard of safety scrutiny, promoting a more responsible and ethical development landscape.

- Build public trust: Openness and transparency are crucial for earning public trust in AI. This move signals a commitment to responsible development and helps alleviate public concerns about this powerful technology.

But is it enough?

While this marks a significant step in the right direction, questions remain:

- How comprehensive will the testing requirements be? Will they cover all facets of AI systems, from data training to deployment and post-release monitoring?

- Will the reported data be accessible to the public? Transparency relies on public scrutiny, so ensuring clear and accessible reporting is crucial.

- How will enforcement work? What penalties will be levied on companies that fail to comply or whose safety tests uncover significant risks?

These questions highlight the need for ongoing dialogue and refinement of this policy. Nonetheless, the U.S. government's initiative sets a strong precedent for holding AI companies accountable and fostering a more responsible and trustworthy future for this transformative technology.

As we navigate the increasingly complex world of AI, fostering responsible development and ensuring public trust are paramount. This move by the U.S. government is a powerful step in the right direction, one that deserves our attention and continued discussion.
