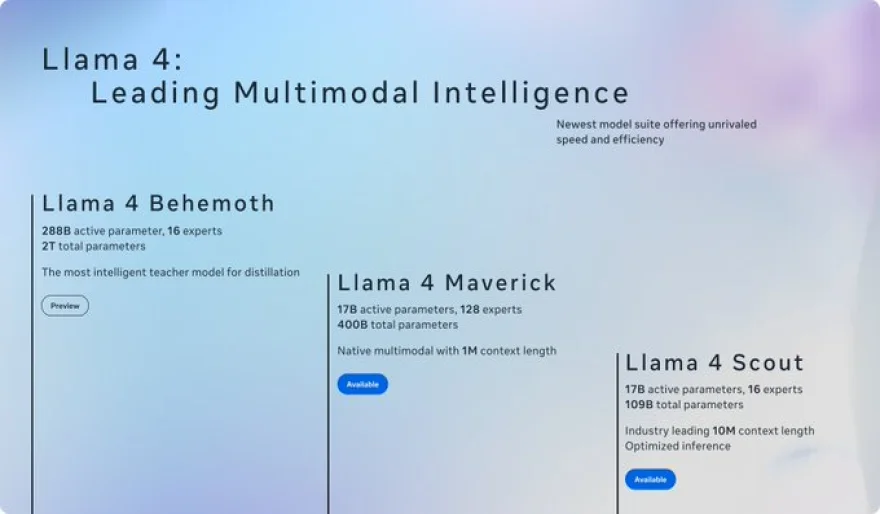

Meta AI Launches Llama 4 Models — Scout, Maverick, and the Massive Behemoth

Meta just dropped Llama 4, featuring Scout (10M token context), Maverick (beats GPT-4o on key tasks), and Behemoth (2T param MoE monster still training). Open-source, multimodal, and cheaper to run. The AI wars in 2025 just got a major plot twist.

April 05, 2025 22:41

Meta AI released the Llama 4 model family on April 5, 2025, marking a major leap in open-source AI. Known for its developer-friendly approach, Meta has built a lineup that rivals, and on some benchmarks surpasses, GPT-4o and Gemini 2.0. Here's what's new and why it matters:

The Details: Models in the Llama 4 Lineup

1. Llama 4 Scout

- Lightweight and highly efficient
- Tailored for document summarization and reasoning over large codebases
- Supports a massive 10 million token context window, ideal for long documents and multimodal input
- Prioritizes speed and versatility in processing

2. Llama 4 Maverick

- Designed for general chat, creative writing, and coding
- According to Meta, outperforms top competitors like GPT-4o and Gemini 2.0 on several benchmarks (reasoning, multilingual, and code generation)
- Notably cost-efficient: reportedly up to 10x cheaper to run than alternatives

3. Llama 4 Behemoth (preview model)

- Still in training, but already turning heads
- Features 288B active parameters in a Mixture-of-Experts (MoE) architecture totaling 2 trillion parameters
- Trained on 30 trillion multimodal tokens
- Aims to serve as a “teacher” model for distilling Scout and Maverick
- Said to outperform GPT-4.5 on STEM-related tasks
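To put Scout's 10 million token context window in perspective, here is a rough back-of-the-envelope sketch in Python. The 4-characters-per-token ratio is an assumed rule of thumb (real ratios depend on the tokenizer and the content), and the 30-million-character codebase is a made-up example; only the 10M figure comes from the article.

```python
import math

SCOUT_CONTEXT_TOKENS = 10_000_000  # context window quoted in the article


def estimate_tokens(num_chars, chars_per_token=4.0):
    """Rough token estimate; chars_per_token is an assumed heuristic."""
    return math.ceil(num_chars / chars_per_token)


def fits_in_context(num_chars, context_tokens=SCOUT_CONTEXT_TOKENS,
                    chars_per_token=4.0):
    """Return True if the estimated token count fits in the window."""
    return estimate_tokens(num_chars, chars_per_token) <= context_tokens


# Hypothetical codebase of 30 million characters.
codebase_chars = 30_000_000
estimated = estimate_tokens(codebase_chars)
fits = fits_in_context(codebase_chars)
print(estimated, fits)
```

Under these assumptions the whole codebase comes to about 7.5 million tokens, so it would fit in a single prompt with room to spare.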

Key Innovations and Features

- Multimodal Understanding: Native support for text, images, and possibly video, trained on large amounts of unlabeled data
- Mixture-of-Experts (MoE): Efficient architecture that activates only a subset of parameters (e.g. 288B out of 2T for Behemoth) for each input token, rather than the full model
- Massive Context Window: Scout’s 10 million tokens allow seamless handling of extremely long or complex inputs
- Tuned for Openness: Meta adjusted the models to provide more balanced, less restrictive answers to "contentious" prompts
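To make the Mixture-of-Experts idea concrete, here is a minimal Python sketch of top-k routing for a single token. It is a toy illustration, not Meta's implementation: the softmax router, the choice of k, the four scaling "experts", and the router scores are all assumptions made for the example. The only figures taken from the article are Behemoth's 288B active and 2T total parameters.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)  # renormalize over the chosen experts
    return [(i, probs[i] / norm) for i in top]


def moe_layer(x, experts, router_scores, k=1):
    """Run only the selected experts and blend their outputs by weight."""
    chosen = route_top_k(router_scores, k)
    output = sum(w * experts[i](x) for i, w in chosen)
    return output, [i for i, _ in chosen]


# Toy experts: each one just scales its input (hypothetical, for illustration).
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
output, used = moe_layer(10.0, experts, router_scores=[0.1, 2.0, 0.3, 1.5], k=2)

# Share of parameters active per token, using the article's Behemoth figures.
active_fraction = 288e9 / 2e12
print(used, round(output, 2), f"{active_fraction:.1%}")
```

Only two of the four toy experts run for this token, which is where the compute savings come from. By the same logic, the article's Behemoth figures imply that roughly 14% of its parameters are active at a time.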

Availability & Licensing

- Scout and Maverick: Freely available at llama.com and through partners like Hugging Face
- Behemoth: Not yet publicly released, but previewed for future use
- Licensing Restrictions:
  - No use or distribution in the EU (reportedly due to the AI Act and GDPR)
  - Companies with 700M+ monthly active users need special licensing approval from Meta

Training & Strategic Context

- Scale: Trained using over 100,000 Nvidia H100 GPUs, a reported 10x increase over Llama 3
- Investment: Meta reportedly plans to spend up to $65B on AI infrastructure in 2025
- Motivation: The rise of efficient models from Chinese AI lab DeepSeek accelerated Meta’s timeline
- Delays: Originally expected earlier in April, the launch was pushed back due to performance lags in math and reasoning (now resolved)

Use Cases and Integration

- Scout: Ideal for summarization, analysis of large codebases, and long-form context tasks
- Maverick: Strong in chat applications, writing, and programming
- Behemoth: Poised to be a powerhouse in AI research and future model development
- Already in Use: These models power Meta AI assistants on WhatsApp, Messenger, and Instagram, now live in 40 countries

Community Buzz

On April 5, users on X called Llama 4’s features “insane” and “a big surprise,” especially Scout’s unprecedented context length and Behemoth’s scale. While early sentiment is excited, independent validation of benchmarks will be key.

Why It Matters

Llama 4 cements Meta’s place as a serious open-source AI player.

With multimodal inputs, massive scale, and low deployment costs, these models could reshape everything from enterprise search and education to creative tools and personal assistants.

And with Behemoth still training, this is likely just the beginning.
