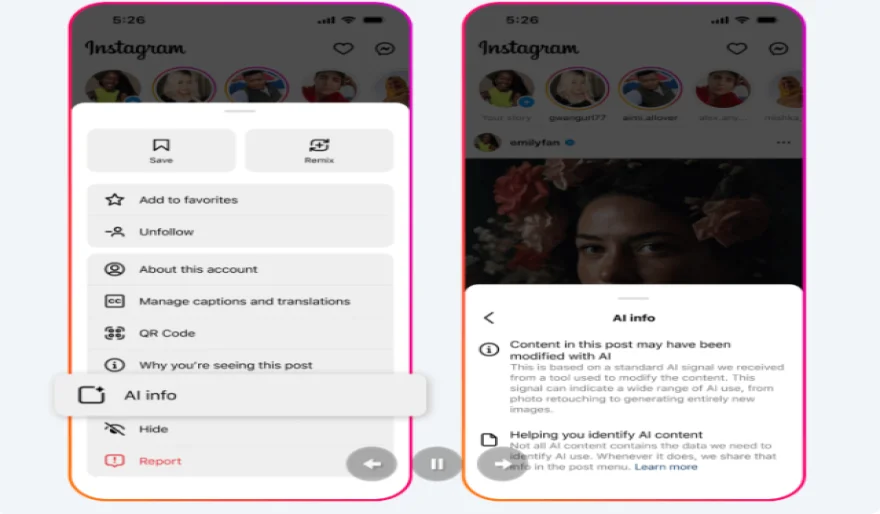

Meta is making its AI info label less visible on AI-modified content

3 min read · September 13, 2024 06:24

Meta, the parent company of Facebook and Instagram, is reportedly making its AI information label less visible on content that has been edited or modified by AI tools. This move raises concerns about transparency and the potential for users to be misled about the authenticity of the content they encounter on these platforms.

A less visible AI label could make it harder for users to identify content that has been manipulated or generated by AI, increasing the risk that misinformation and deepfakes spread on these platforms. Reduced transparency could also erode trust in the platforms and their content, and damage Meta's reputation.

While Meta may argue that a less prominent label is necessary to improve the user experience and avoid cluttering the platform with excessive information, it is crucial to balance this with the need for transparency. Users have the right to know when the content they are consuming has been altered or generated by AI.

The issue of AI-generated content has become increasingly important as AI technology continues to advance. Social media platforms must take steps to ensure that users are aware of the potential risks associated with this type of content. A transparent approach, including clear labeling of AI-generated or edited content, is essential for maintaining trust and integrity in the digital world.

It remains to be seen how this change will impact user trust and the overall integrity of content on Meta's platforms. As AI technology continues to evolve, it is essential for social media companies to prioritize transparency and ethical practices.
