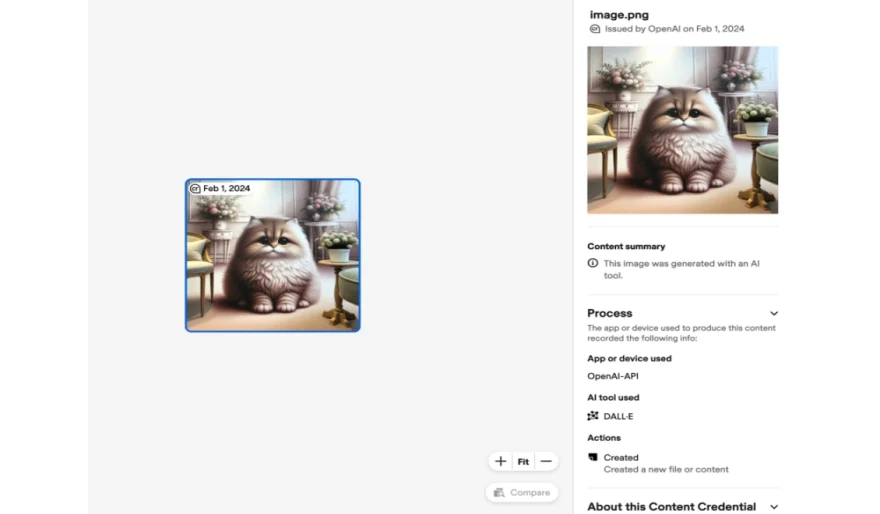

OpenAI Unveils DALL-E 3's Identity Badge

7 min read · February 07, 2024 08:11

OpenAI adds watermarks to DALL-E 3-generated images, aiming for transparency. But does this clutter up creativity or enhance accountability? A debate ignites in the world of AI-generated imagery.

The world of AI-generated imagery just got a bit more transparent (and potentially a bit more cluttered). OpenAI, the AI company behind the powerful DALL-E 3 image generation model, has announced that it is adding watermarks to the model's creations. Buckle up, because this news has sparked a debate worth diving into.

So, what's the watermark all about?

Imagine you encounter a mind-blowing image online: a photorealistic portrait of an astronaut riding a horse through a nebula. Is it real, or a product of AI wizardry? OpenAI's new watermark aims to answer that question. It's a subtle badge embedded in the image file that follows the C2PA (Coalition for Content Provenance and Authenticity) standard, the industry-wide specification behind Content Credentials. This tiny mark whispers two crucial things:

1. This image is AI-generated.
2. Its origin is disclosed, in line with ethical guidelines for transparency.
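To make the "embedded badge" idea concrete, here is a minimal Python sketch that checks whether a PNG file carries a C2PA manifest. It assumes, per the C2PA specification, that PNG files store the manifest in a chunk of type `caBX`; the helper names (`png_chunks`, `has_c2pa_chunk`, `make_demo_png`) are hypothetical, and the demo manifest is placeholder bytes, not real C2PA data. It only detects that the chunk is present: it does not parse the manifest or verify its cryptographic signature, which dedicated C2PA tools do.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
C2PA_CHUNK = b"caBX"  # assumption: chunk type the C2PA spec assigns to PNG manifests


def png_chunks(data: bytes):
    """Yield (chunk_type, chunk_body) pairs from raw PNG bytes."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # Each chunk: 4-byte big-endian length, 4-byte type, body, 4-byte CRC.
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        yield ctype, data[pos + 8:pos + 8 + length]
        pos += 12 + length
        if ctype == b"IEND":
            break


def has_c2pa_chunk(data: bytes) -> bool:
    """Presence check only: no manifest parsing, no signature validation."""
    return any(ctype == C2PA_CHUNK for ctype, _ in png_chunks(data))


def make_chunk(ctype: bytes, body: bytes) -> bytes:
    """Build one PNG chunk with a correct CRC over type + body."""
    crc = zlib.crc32(ctype + body) & 0xFFFFFFFF
    return struct.pack(">I", len(body)) + ctype + body + struct.pack(">I", crc)


def make_demo_png(with_manifest: bool) -> bytes:
    """Build a 1x1 grayscale PNG; the 'manifest' is a placeholder, not real C2PA data."""
    ihdr = struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0)
    idat = zlib.compress(b"\x00\x00")  # filter byte 0 + one gray pixel
    chunks = [make_chunk(b"IHDR", ihdr)]
    if with_manifest:
        chunks.append(make_chunk(C2PA_CHUNK, b"placeholder manifest"))
    chunks += [make_chunk(b"IDAT", idat), make_chunk(b"IEND", b"")]
    return PNG_SIGNATURE + b"".join(chunks)


print(has_c2pa_chunk(make_demo_png(True)))   # True
print(has_c2pa_chunk(make_demo_png(False)))  # False
```

To try it on a real download, read the file with `open(path, "rb").read()` and pass the bytes to `has_c2pa_chunk`. JPEG and WebP files embed the manifest differently and are not handled by this sketch.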

Why the watermark wave?

As AI-generated content becomes increasingly realistic, concerns about misinformation, deepfakes, and ethics are swirling. This watermark acts as a shield, promoting transparency and helping users make informed decisions about the content they see. Additionally, by recording which tool produced an image, it offers a starting point in the murky waters of copyright and ownership around these AI-born creations.

But are watermarks the silver bullet?

Opinions, much like images, can be diverse. While some hail this move as a responsible step towards ethical AI development, others raise concerns. Some find the watermarks visually distracting, potentially hindering artistic expression. Others question their effectiveness in preventing misuse or misattribution. The debate is on, and the future of watermarks in the AI art world remains an open canvas.
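The effectiveness concern is easy to illustrate: metadata-based credentials live alongside the pixels rather than in them, so any tool that rewrites the file can drop them. The minimal Python sketch below removes the C2PA chunk from a PNG while leaving the image data untouched. It uses hypothetical helper names (`split_chunks`, `strip_c2pa`), assumes the C2PA spec's `caBX` chunk type for PNG, and uses placeholder manifest bytes. A screenshot has the same effect without any deliberate intent, since it produces a brand-new file.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
C2PA_CHUNK = b"caBX"  # assumption: C2PA chunk type for PNG manifests


def make_chunk(ctype: bytes, body: bytes) -> bytes:
    """Build one PNG chunk with a correct CRC over type + body."""
    crc = zlib.crc32(ctype + body) & 0xFFFFFFFF
    return struct.pack(">I", len(body)) + ctype + body + struct.pack(">I", crc)


def split_chunks(data: bytes):
    """Return a list of (chunk_type, raw_chunk_bytes) for each chunk in a PNG."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    chunks, pos = [], len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        end = pos + 12 + length  # length + type + body + CRC
        chunks.append((ctype, data[pos:end]))
        pos = end
        if ctype == b"IEND":
            break
    return chunks


def strip_c2pa(data: bytes) -> bytes:
    """Rebuild the PNG, copying every chunk except the C2PA manifest."""
    kept = [raw for ctype, raw in split_chunks(data) if ctype != C2PA_CHUNK]
    return PNG_SIGNATURE + b"".join(kept)


# Demo: a 1x1 grayscale PNG with a placeholder (not real) manifest chunk.
tagged = PNG_SIGNATURE + b"".join([
    make_chunk(b"IHDR", struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0)),
    make_chunk(C2PA_CHUNK, b"placeholder manifest"),
    make_chunk(b"IDAT", zlib.compress(b"\x00\x00")),
    make_chunk(b"IEND", b""),
])
stripped = strip_c2pa(tagged)

print([t for t, _ in split_chunks(tagged)])    # [b'IHDR', b'caBX', b'IDAT', b'IEND']
print([t for t, _ in split_chunks(stripped)])  # [b'IHDR', b'IDAT', b'IEND']
```

The pixel data (the `IDAT` chunk) is copied byte for byte, so the stripped image looks identical while no longer carrying any credential.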