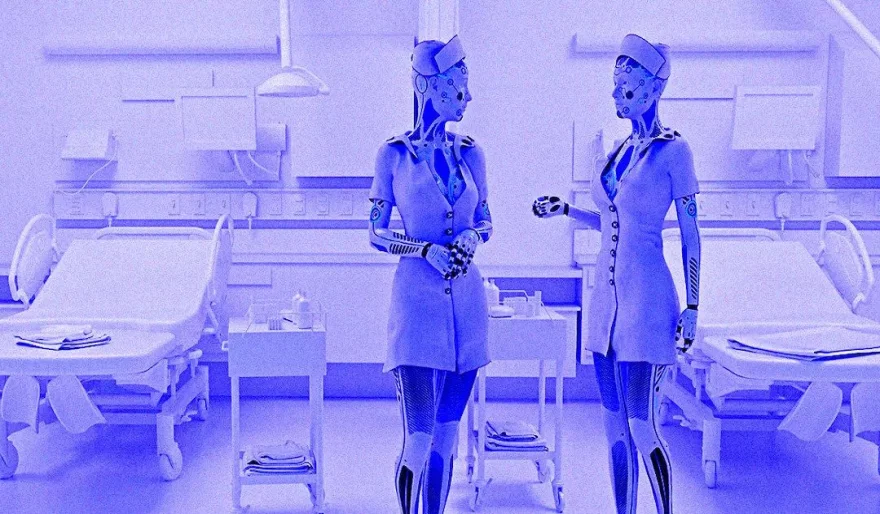

The Role of Chatbots in Healthcare: How Doctors are Using AI to Communicate with Empathy

June 13, 2023 07:08 · 3 min read

Doctors are adopting OpenAI's ChatGPT, an AI chatbot, to foster empathy in patient communication, according to The New York Times. The trend highlights the challenge of balancing medical advice with human compassion.

Doctors are turning to OpenAI's ChatGPT, a popular AI chatbot, to communicate more empathetically with patients, according to The New York Times. The trend raises questions about how to balance medical guidance with human compassion.

Some medical professionals are even using ChatGPT to deliver difficult news, a practice that Microsoft, a partner of OpenAI, has reservations about. The idea of a chatbot delivering bad medical news feels strange to some patients.

One advantage of ChatGPT is its ability to explain medical concepts in plain language, free of confusing jargon. This can help bridge the gap between doctors and patients who struggle with complex medical terminology.

Christopher Moriates, who worked on a project using ChatGPT to inform patients about treatments for alcohol use disorder, points out that even seemingly simple words can be misunderstood. The challenge is to communicate in language that patients can truly understand.

Gregory Moore, formerly of Microsoft, was amazed when he sought advice from ChatGPT on supporting a friend with advanced cancer. However, not everyone is convinced that relying on a chatbot to replace human interaction is a wise choice in healthcare.

Compassion plays a vital role in patient care, and replacing it with an AI tool that cannot tell right from wrong could have negative consequences. Patients' evaluations of their care often hinge on the empathy they receive.

Stanford Health Care's Dev Dash has concerns about physicians using chatbots like ChatGPT to guide clinical decision-making. He argues that such use may be inappropriate and raises ethical issues.

The ethical dilemma of using chatbots in healthcare is complex. Patients may not always know they are interacting with a chatbot, and the impact of chatbot use even in situations that are not life-threatening remains uncertain. We will have to grapple with these ethical challenges going forward.